Understanding RAG: Retrieval-Augmented Generation in Modern AI

Introduction to RAG

Retrieval-Augmented Generation (RAG) combines a large language model with an external knowledge retrieval system. Instead of relying only on what the model learned during training, a RAG system retrieves up-to-date information from external sources and uses it when generating a response.

How RAG Works

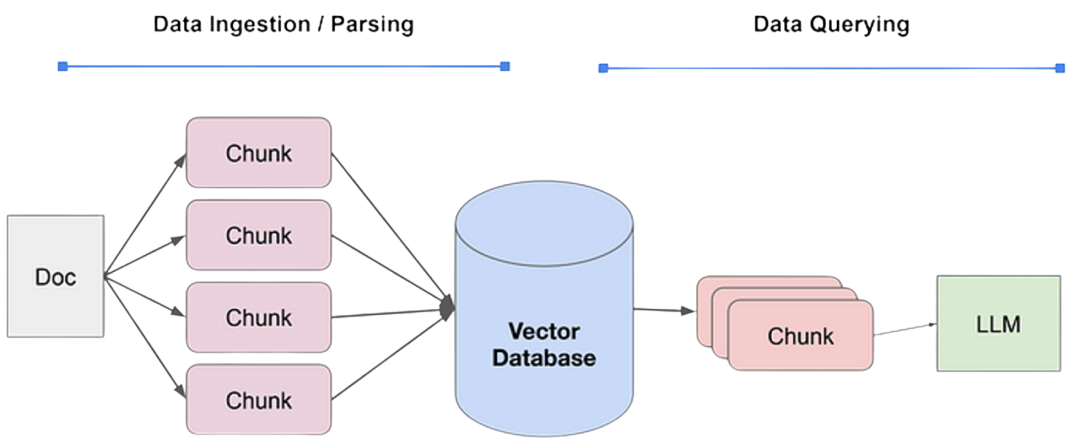

RAG operates through a two-step process:

- Data Ingestion / Parsing: The system ingests and indexes documents to create a structured knowledge base for efficient querying.

- Data Querying: This step covers both data retrieval and answer generation. The system searches the indexed knowledge base for information relevant to the input query, then combines it with the language model's inherent knowledge to generate an accurate, contextual response.
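The two steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pipeline: the function names (`ingest`, `retrieve`, `build_prompt`) and the sample documents are hypothetical, plain word overlap stands in for a real retriever, and the final call to a language model is left out, so the sketch stops at the prompt that would be sent to it.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def ingest(documents: dict[str, str]) -> dict[str, Counter]:
    """Step 1 (ingestion): parse each document and index it as a bag of words."""
    return {doc_id: Counter(tokenize(text)) for doc_id, text in documents.items()}


def retrieve(index: dict[str, Counter], query: str, top_k: int = 1) -> list[str]:
    """Step 2a (retrieval): rank documents by the number of query terms they contain."""
    query_terms = set(tokenize(query))
    scores = {
        doc_id: sum(1 for term in query_terms if term in bag)
        for doc_id, bag in index.items()
    }
    # Highest score first; ties are broken alphabetically by document id.
    ranked = sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))
    return [doc_id for doc_id in ranked[:top_k] if scores[doc_id] > 0]


def build_prompt(documents: dict[str, str], doc_ids: list[str], query: str) -> str:
    """Step 2b (generation input): combine the retrieved text with the question."""
    context = "\n".join(documents[doc_id] for doc_id in doc_ids)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical sample data for illustration only.
documents = {
    "returns": "Items can be returned within 30 days of purchase.",
    "shipping": "Standard shipping takes five business days.",
}
index = ingest(documents)
query = "How long does shipping take?"
hits = retrieve(index, query)
prompt = build_prompt(documents, hits, query)
print(prompt)
```

In a real system the word-overlap scoring would typically be replaced by embedding-based search, and `prompt` would be passed to a language model to produce the final answer.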

Vector Database

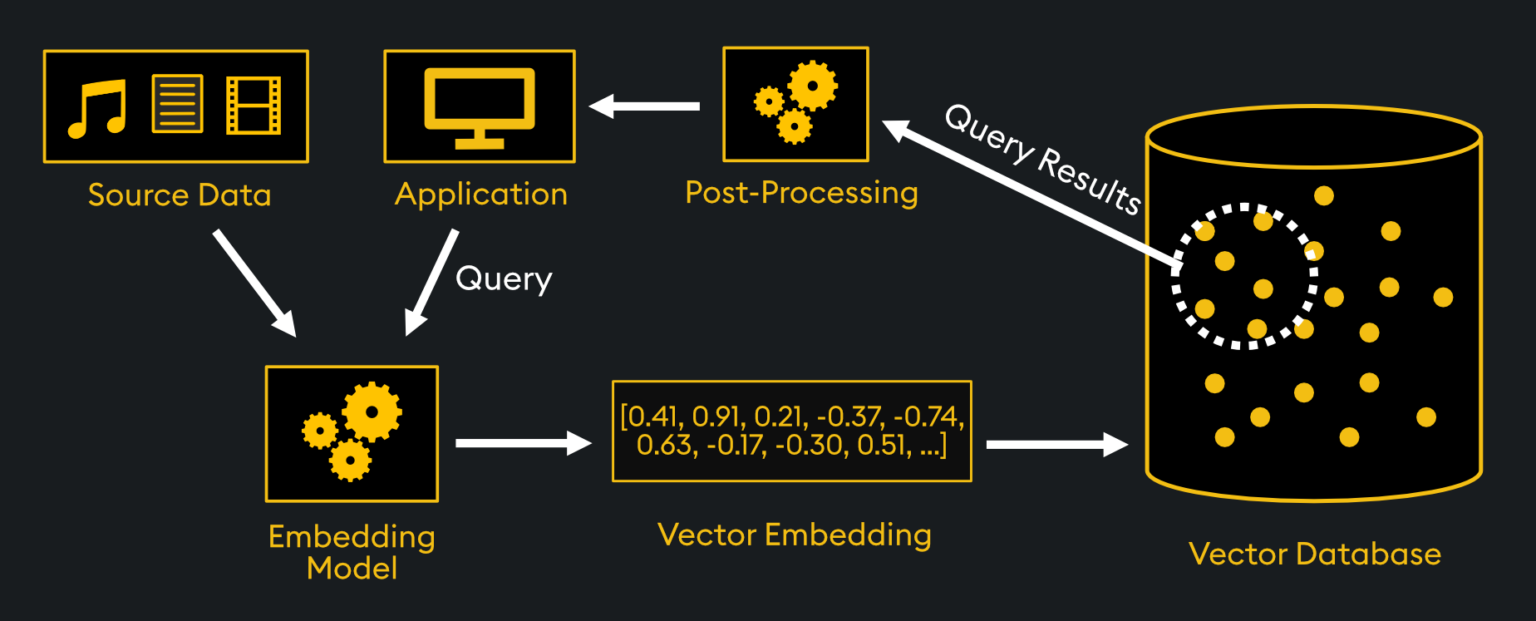

A Vector Database is a specialized database designed to store and efficiently query high-dimensional vector data. In the RAG process, the Embedding Model converts the source data into vector representations, known as Vector Embeddings, that capture its semantic information and relationships. The Vector Database stores these Vector Embeddings so that the system can quickly find the most relevant information for a given query.

When a query is made, the query itself is also represented as a vector. The Vector Database then searches the stored vectors for the data points closest to the query vector, using a distance or similarity measure such as cosine similarity or Euclidean distance. The retrieved information is passed on to the post-processing and generation steps to produce accurate and contextual responses. The key benefits of using a Vector Database in the RAG process include fast retrieval, semantic awareness, scalability, and flexibility, making it a crucial component in enhancing the capabilities of the language model.
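To make the similarity search concrete, here is a small in-memory sketch. It assumes the embeddings already exist: the three-dimensional vectors and the `TinyVectorStore` class are made up for illustration, whereas a real embedding model produces vectors with hundreds or thousands of dimensions. The search is a brute-force scan with cosine similarity; production vector databases usually rely on approximate nearest-neighbor indexes instead.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class TinyVectorStore:
    """Hypothetical in-memory store that compares the query against every vector."""

    def __init__(self) -> None:
        self._items: list[tuple[str, list[float]]] = []

    def add(self, item_id: str, vector: list[float]) -> None:
        self._items.append((item_id, vector))

    def search(self, query_vector: list[float], top_k: int = 2) -> list[tuple[str, float]]:
        """Return the top_k (item_id, similarity) pairs, most similar first."""
        scored = [
            (item_id, cosine_similarity(query_vector, vector))
            for item_id, vector in self._items
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


# Toy embeddings, invented for this example.
store = TinyVectorStore()
store.add("cats", [0.9, 0.1, 0.0])
store.add("dogs", [0.8, 0.3, 0.1])
store.add("stocks", [0.0, 0.2, 0.9])

results = store.search([1.0, 0.0, 0.0], top_k=2)
for item_id, score in results:
    print(f"{item_id}: {score:.3f}")
```

The query vector points in nearly the same direction as the "cats" and "dogs" vectors, so those two entries rank above "stocks".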

Key Benefits of RAG

Reduced hallucination in AI responses

Hallucination, or the generation of plausible-sounding but factually incorrect information, is a common issue in language models. RAG systems can mitigate this by grounding their responses in the retrieved information from the knowledge base, rather than relying solely on the language model's own generation capabilities. This helps ensure that the responses are more truthful and evidence-based.

Improved transparency and source attribution

RAG systems can provide users with information about the sources and provenance of the data used to generate the responses. This transparency can help build trust and credibility, as users can better understand the reasoning behind the system's outputs. Source attribution also allows users to further explore or verify the information provided, if desired.

For example, Perplexity.AI, a conversational AI assistant, provides users with information about the sources of the content it generates. When Perplexity.AI provides a response, it includes citations to the relevant sources, allowing users to easily verify the information or explore the topic further. This level of transparency helps to establish trust and accountability, as users can see the evidence behind the system's outputs.
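One simple way to support grounding and source attribution is to number the retrieved passages in the prompt and ask the model to cite them. The sketch below is an assumption about how this could be done, not a description of how Perplexity.AI or any other product implements it; the function names, the passages, and the `model_answer` string (a stand-in for real model output) are all hypothetical.

```python
import re


def build_cited_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    """Build a prompt whose sources are numbered so the model can cite them as [n].

    Each passage is a (source_title, text) pair.
    """
    numbered = "\n".join(
        f"[{i}] {text}" for i, (_, text) in enumerate(passages, start=1)
    )
    return (
        "Answer using only the sources below and cite them as [n].\n\n"
        f"Sources:\n{numbered}\n\n"
        f"Question: {query}\nAnswer:"
    )


def cited_sources(answer: str, passages: list[tuple[str, str]]) -> list[str]:
    """Map [n] markers found in the answer back to the titles of the cited sources."""
    numbers = sorted({int(n) for n in re.findall(r"\[(\d+)\]", answer)})
    # Ignore markers that do not correspond to a retrieved passage.
    return [passages[n - 1][0] for n in numbers if 1 <= n <= len(passages)]


# Hypothetical retrieved passages and model output.
passages = [
    ("Returns policy", "Items can be returned within 30 days."),
    ("Shipping FAQ", "Standard shipping takes five business days."),
]
prompt = build_cited_prompt("How long does shipping take?", passages)
model_answer = "Standard shipping takes five business days [2]."
sources = cited_sources(model_answer, passages)
print(sources)
```

Restricting the model to the listed sources addresses hallucination, and mapping the citation markers back to source titles gives users something concrete to verify.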

Real-World Applications

RAG has found successful applications in customer support systems, exemplified by Thomson Reuters:

Thomson Reuters uses Retrieval-Augmented Generation (RAG) to enhance its customer support services. By integrating RAG, the company can efficiently retrieve relevant information from its extensive knowledge base and generate tailored responses to customer inquiries. The benefits highlighted include:

- Improved response times through quick retrieval of pertinent data.

- Personalized interactions that address specific customer needs.

- Increased customer satisfaction due to accurate and context-aware answers.

For more details, you can read the full article on this implementation: Better Customer Support Using Retrieval-Augmented Generation (RAG) at Thomson Reuters.

Future Perspectives

The future of RAG technology looks promising, with potential developments in several key areas:

- More sophisticated retrieval mechanisms: Advanced algorithms will enhance the precision and relevance of retrieved information. For more insights, check out this article: Recent Advancements in Information Retrieval (2025).

- Better integration with multimodal data: Future RAG systems may combine text, images, and other data types to provide richer and more context-aware responses. Learn more about this trend in: Multimodal AI: Bridging the Gap Between Vision, Language, and Reality.